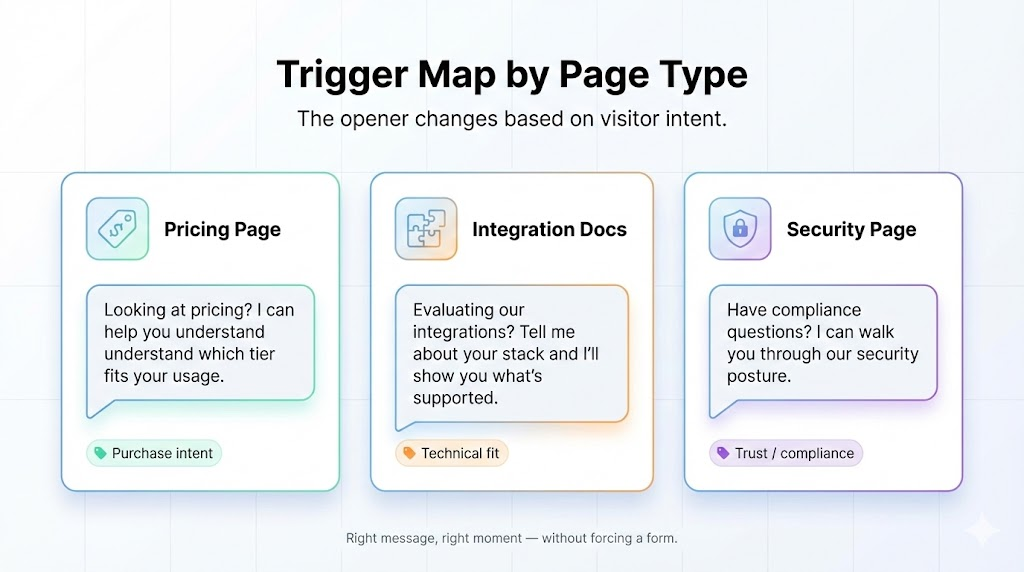

It's late evening. A buyer from a target account is on your pricing page, comparing you side-by-side with a competitor. They're trying to understand deployment complexity, security posture, and whether you fit their timeline.

No rep is involved yet. Your website is the only interface.

That's the modern B2B buying moment. It's also where most chatbots quietly fail, because they were built to route conversations, not to support evaluation. They decide where a conversation goes, not whether it should progress toward a buying decision. In 2026, that difference matters.

Why Chatbots vs AI Agents Is Suddenly an Urgent Question

Until recently, the "chatbot vs. agent" question didn't exist. Chatbots were the only option. But LLM-based systems have made it possible to build agents that reason through scenarios, not just route visitors to forms or calendars. That shifts what's possible and what buyers now expect from website conversations.

B2B buying is long, non-linear, and multi-stakeholder. Gartner research shows the average B2B buying group includes 6-10 decision-makers, and buyers complete the majority of their research before engaging sales. Your website isn't just a lead capture surface anymore. It's where evaluation happens.

Buyers return multiple times. They ask "risk questions" early: integrations, security posture, compliance, pricing logic, deployment constraints, internal approvals.

When your website can't handle those evaluation-grade questions in the moment, the failure pattern is predictable:

- High-intent visitors bounce

- Pipeline gets noisier

- SDRs restart discovery from scratch

- Buyers repeat themselves across every interaction

The question isn't whether to have website conversations. It's whether those conversations can carry the weight of real evaluation.

B2B Chatbots: What They Are and What They’re Good At

A B2B chatbot is a conversational interface that:

- Engages visitors

- Answers predefined questions

- Captures lead information

- Routes to sales or support

Its primary job is orchestration, not selling or solutioning.

When chatbots work well

- Routing decisions ("Are you looking for sales or support?")

- Basic qualification gates (email, company name, role)

- FAQ deflection

- Meeting scheduling

Chatbots are also "safer" by design. They're bound. Limited freedom means fewer surprises. For high-volume, low-complexity interactions, that predictability is valuable.

The limitation

Chatbots determine where a conversation should go, not whether it should progress toward a buying decision. That's a significant gap in B2B, where evaluation questions are rarely single-intent. A buyer asking about "pricing" might actually be asking about scalability, commitment flexibility, and competitive positioning, all at once.

AI Agents: What They Are and How They Differ from Chatbots

An AI agent doesn't just answer questions. It runs a lightweight discovery process. It clarifies constraints, maps needs to capabilities, surfaces tradeoffs, and recommends a path forward.

Think of it as an early-stage AE or SE embedded in your website.

What this looks like in practice

A buyer asks: "We're a 150-person SaaS company with a PLG motion, EU customers, and a tiny RevOps team. Can you handle this without a 6-month implementation?"

That's not FAQ territory. That's scenario evaluation. A chatbot deflects it. An agent engages it.

A good agent:

- Asks clarifying questions to understand constraints

- Reasons across multiple factors (timeline, team size, compliance needs)

- Recommends the best-fit path or package

- Hands off to sales with context intact
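The discovery loop above can be sketched as a simple slot-filling routine: keep asking clarifying questions until the constraints a recommendation depends on are known, then recommend and hand off with the collected context. This is a minimal Python sketch under stated assumptions. The slot names, clarifying questions, package names, and the `recommend` rule are all hypothetical, and a real agent would extract slot values from free-text answers with a language model rather than receive them pre-parsed.

```python
from dataclasses import dataclass, field

# Hypothetical constraints the agent needs before it recommends anything.
REQUIRED_SLOTS = ("team_size", "timeline_weeks", "data_region")

# Hypothetical clarifying question for each missing constraint.
CLARIFYING_QUESTIONS = {
    "team_size": "Roughly how many people will use the product?",
    "timeline_weeks": "How many weeks do you have until go-live?",
    "data_region": "Where does your customer data need to be hosted?",
}


@dataclass
class Scenario:
    """Visitor constraints captured so far in the conversation."""
    slots: dict = field(default_factory=dict)


def recommend(slots: dict) -> str:
    """Placeholder rule that weighs two factors at once (timeline and region)."""
    tier = "guided-onboarding" if slots["timeline_weeks"] <= 8 else "self-serve-onboarding"
    hosting = "eu-hosting" if slots["data_region"] == "EU" else "default-hosting"
    return f"{tier}+{hosting}"


def next_step(scenario: Scenario) -> dict:
    """Ask about the first missing constraint, or recommend and hand off."""
    missing = [slot for slot in REQUIRED_SLOTS if slot not in scenario.slots]
    if missing:
        return {"action": "clarify", "question": CLARIFYING_QUESTIONS[missing[0]]}
    return {
        "action": "handoff",
        "recommendation": recommend(scenario.slots),
        # Copy the context so sales receives exactly what the buyer said.
        "context": dict(scenario.slots),
    }
```

For the 150-person buyer with EU customers in the example above, `next_step` would first ask about the timeline. Once `timeline_weeks` is filled in, it returns a handoff whose `context` carries all three constraints, so the SDR doesn't restart discovery.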

This is an architectural upgrade, not a UI upgrade.

The Core Difference: Chatbots Route, AI Agents Reason

Chatbots route conversations.

Traditional chatbots optimize for control and predictability. They reduce variance by design: fixed flows, limited intent buckets, deterministic outcomes. This tradeoff works for routing. It fails for evaluation.

Where routing breaks down:

- Pricing tradeoffs ("What happens if we need to scale mid-contract?")

- Objections ("We tried something similar and it failed—why is this different?")

- Competitive comparisons ("How do you compare to [Competitor X] on security?")

- Timeline pressure ("We need to be live in 6 weeks—is that realistic?")

- Internal stakeholder concerns ("Our IT team will ask about SOC 2—what should I tell them?")

These are exactly the moments where buyer intent is highest. And exactly where chatbots deflect to forms or calendars.
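To make "fixed flows, limited intent buckets, deterministic outcomes" concrete, here is a minimal Python sketch of a keyword-based router. The bucket names, keywords, and the `lead_form` fallback are hypothetical, and many chatbot platforms use trained intent classifiers rather than keyword lists, but the shape of the decision is the same: every message lands in exactly one predefined bucket.

```python
# Hypothetical intent buckets, checked in order; the first match wins.
INTENT_KEYWORDS = {
    "sales": ("pricing", "demo", "quote"),
    "support": ("bug", "error", "login"),
    "scheduling": ("meeting", "calendar", "book"),
}

# Hypothetical destination for anything that matches no bucket.
FALLBACK = "lead_form"


def route(message: str) -> str:
    """Deterministically map a message to a single bucket."""
    text = message.lower()
    for bucket, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return bucket
    return FALLBACK


print(route("What happens if we need to scale mid-contract?"))  # lead_form
print(route("How does pricing change if we scale mid-contract and need EU hosting?"))  # sales
```

The first evaluation question falls through to the form. The second, which mixes pricing, scaling, and hosting, is collapsed into a single `sales` bucket. Either way, the nuance the buyer expressed is lost before anyone sees it.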

Agents reason through evaluation.

Instead of deflecting complex questions, agents move the buyer closer to a decision:

- Turn messy, multi-part questions into crisp clarifying questions

- Map stated needs to specific capabilities

- Make tradeoffs explicit ("You mentioned timeline is critical—here's what that means for implementation scope")

- Recommend next steps with context intact

This matches what buyers now expect: answers, not navigation. They don't want to dig through pages or fill out forms to learn the basics.


Chatbot capability plateaus early. Agent capability extends across complexity ranges.

How AI Agents Work: The Architecture That Enables Reasoning

Agent reasoning is grounded in three layers:

- Knowledge base (structured, indexed): Product docs, pricing logic, competitive positioning—indexed so the agent can retrieve accurate information, not hallucinate

- Product schema (capabilities and integrations as connected facts): Not just "we integrate with Salesforce" but "we integrate with Salesforce, here's what syncs, here's what doesn't, here's how setup works"

- Visitor scenario (role, stack, constraints, urgency): Context captured during the conversation that shapes recommendations

This grounding is what separates useful agents from chatbots with better language models.
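The three grounding layers can be sketched as a function that assembles everything the model is allowed to answer from, and reports when nothing relevant was found. This is a minimal Python sketch under stated assumptions. The word-overlap `retrieve` function is a toy stand-in for a real search index or embedding retrieval, and the knowledge-base entries and Salesforce integration facts are hypothetical placeholders, not real product data.

```python
import re
from dataclasses import dataclass


def tokens(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass(frozen=True)
class Integration:
    """Product schema: an integration described as connected facts."""
    name: str
    syncs: tuple[str, ...]
    does_not_sync: tuple[str, ...]
    setup: str


def retrieve(question: str, knowledge_base: dict[str, str], k: int = 2) -> list[str]:
    """Toy retrieval: rank documents by the number of words shared with the question."""
    q = tokens(question)
    scored = [(len(q & tokens(text)), doc_id) for doc_id, text in knowledge_base.items()]
    return [doc_id for score, doc_id in sorted(scored, reverse=True)[:k] if score > 0]


def build_grounding(question: str, knowledge_base: dict[str, str],
                    integrations: list[Integration], scenario: dict) -> dict:
    """Combine knowledge base, product schema, and visitor scenario into one context."""
    # Single-word integration names only, to keep the sketch short.
    mentioned = [i for i in integrations if i.name.lower() in tokens(question)]
    facts = [
        f"{i.name} syncs: {', '.join(i.syncs)}; does not sync: "
        f"{', '.join(i.does_not_sync)}; setup: {i.setup}"
        for i in mentioned
    ]
    passages = retrieve(question, knowledge_base)
    return {
        "passages": passages,
        "integration_facts": facts,
        "scenario": scenario,
        # Nothing grounded means clarify or escalate, never improvise.
        "grounded": bool(passages or facts),
    }


# Hypothetical example data, not real product facts.
KB = {
    "security-overview": "Security documentation covers encryption and access controls.",
    "pricing-overview": "Plans are priced per seat with annual or monthly terms.",
}
INTEGRATIONS = [
    Integration("Salesforce", ("contacts", "accounts"), ("custom objects",), "admin connects via OAuth"),
]
```

A question such as "Can you go live in six weeks?" matches nothing in this toy data, so `grounded` comes back `False`, which is the signal to ask a clarifying question or route to a human instead of generating a plausible-sounding answer.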

The Risks That Come With AI Agents

Here's the part your buyers and your security team will care about: agents increase blast radius if you don't constrain them.

Assume these specific risks will show up in production:

- Prompt injection: Users attempting to override instructions or extract information

- Sensitive information disclosure: Agent reveals pricing, roadmap, or customer details it shouldn't

- Excessive agency: Agent takes actions (CRM writes, meeting bookings) without appropriate checks

So your "agent vs. chatbot" decision isn't only about capability. It's about capability + control.
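One layer of control for the sensitive-information risk is to screen every drafted reply before it reaches the buyer. This is a minimal Python sketch under stated assumptions. The restricted topics, patterns, and customer names are hypothetical, and pattern matching is easy to evade, so treat it as one layer alongside grounding, permissions, and logging, not as a defense against prompt injection on its own.

```python
import re

# Hypothetical restricted topics; a real list would come from security and legal.
RESTRICTED = {
    "roadmap": re.compile(r"\b(roadmap|unreleased)\b", re.IGNORECASE),
    "customer_names": re.compile(r"\b(acme corp|globex)\b", re.IGNORECASE),  # placeholder names
    "discounting": re.compile(r"\bdiscount(s|ed|ing)?\b", re.IGNORECASE),
}

SAFE_FALLBACK = "That's a good question for our team. Want me to connect you with someone?"


def review_draft(draft: str) -> tuple[bool, list[str]]:
    """Return (ok_to_send, violated_topics) for a drafted agent reply."""
    hits = [topic for topic, pattern in RESTRICTED.items() if pattern.search(draft)]
    return (not hits, hits)


def finalize(draft: str) -> str:
    """Send the draft only if it passes review; otherwise hand off politely."""
    ok, _ = review_draft(draft)
    return draft if ok else SAFE_FALLBACK
```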

Why Governance Isn’t Optional for AI Agents

Agents can do more than chatbots, which means they can break more, too. Without guardrails, you're exposed to prompt injection, sensitive data disclosure, and uncontrolled CRM writes. Vendors who treat governance as an afterthought will cost you pipeline, trust, or both.

Governance Checklist: Questions to Ask Before You Deploy AI Agents

If you're evaluating agents, require evidence of:

What “Good” Looks Like in AI Agents: Docket as an Example

Docket's guardrails are architectural, not "please behave" prompts.

Their custom cognitive architecture splits the system into:

- Responder: The buyer-facing conversation layer

- Thinker: The deliberate reasoning and execution layer

The agent chatting with your buyer isn't the same system touching your CRM. Actions flow through a shared tool registry, which means the agent can only perform pre-approved operations (scoped actions, least privilege). You can log the plan and tool calls for audit and debugging.

That separation is the control point: risky requests can be clarified, refused, or escalated before anything gets written to systems of record.
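The pattern described here (a reasoning layer that can act only through a registry of pre-approved, scoped operations, with every call logged) can be sketched in a few lines. This is not Docket's implementation, which isn't shown here; it's a minimal Python sketch in which `Tool`, `ToolRegistry`, `execute_plan`, the tool names, and the review rule are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Tool:
    name: str
    handler: Callable[[dict], str]
    allowed_args: frozenset[str]  # least privilege: the only arguments accepted
    needs_review: bool = False    # risky writes are escalated, not executed


@dataclass
class ToolRegistry:
    tools: dict[str, Tool] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def execute(self, name: str, args: dict) -> str:
        tool = self.tools.get(name)
        if tool is None:
            outcome = "refused: tool not registered"
        elif not set(args) <= tool.allowed_args:
            outcome = "refused: arguments out of scope"
        elif tool.needs_review:
            outcome = "escalated: queued for human review"
        else:
            outcome = tool.handler(args)
        # Every attempt is logged, including refusals, for audit and debugging.
        self.audit_log.append({"tool": name, "args": dict(args), "outcome": outcome})
        return outcome


def execute_plan(plan: list[dict], registry: ToolRegistry) -> list[str]:
    """Reasoning layer: runs a plan step by step, only through the registry."""
    return [registry.execute(step["tool"], step["args"]) for step in plan]
```

In this sketch the buyer-facing layer would only ever see the returned outcome strings, so an injected request for an unregistered action such as `delete_account` ends up as a logged refusal rather than a write to the CRM.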

How Chatbots vs AI Agents Play Out in Inbound Qualification

Chatbots vs AI Agents: Side-by-Side Comparison

How to Decide Between a Chatbot and an AI Agent

Pick a chatbot if:

- Your primary job is routing ("sales vs. support," "book a meeting")

- Questions are mostly FAQ-level

- Wrong answers are low-risk

- You need maximum predictability

- You don't have bandwidth for knowledge governance

Pick an agent if:

- Buyers ask scenario questions about stack, security, compliance, deployment, or pricing logic

- You need real qualification, not just email capture

- You care about CRM integrity (structured handoffs, clean routing)

- You want your website to behave like an answer engine

- You're ready to implement controls aligned with the OWASP Top 10 for LLM Applications

Implementation Traps: Mistakes with Chatbots and AI Agents That Cost You Pipeline

If you deploy a chatbot:

- Avoid the bot wall: Don't gate with email before delivering value. Keep required fields minimal. Provide escape hatches (talk to human, send summary, view docs).

- Don't over-branch: Too many decision trees create dead ends. Buyers get stuck or abandon.

- Test with real buyer questions: FAQ coverage isn't enough. Use actual questions from sales calls and see where the bot breaks.

If you deploy an agent:

- Treat it like a production system: Grounding, permissions, and logging are mandatory from day one, not "phase two."

- Start with constrained scope: One product line, one ICP segment. Expand after you've validated accuracy.

- Build a feedback loop: Flag bad answers, review them weekly, and fix the underlying knowledge or instructions. Agents improve with attention; they degrade with neglect.

- Set escalation rules: Uncertainty should trigger clarifying questions or human routing, not confident-sounding hallucinations.
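An escalation rule like this one can be written as an explicit policy instead of being left to the model's tone. This is a minimal Python sketch under stated assumptions. The confidence score (for example, a retrieval or self-assessment score), the thresholds, and the two-clarification limit are hypothetical values you would tune against the transcripts reviewed in your feedback loop.

```python
from enum import Enum

# Hypothetical thresholds; tune them against reviewed conversations.
ANSWER_THRESHOLD = 0.8
CLARIFY_THRESHOLD = 0.5
MAX_CLARIFICATIONS = 2


class NextAction(Enum):
    ANSWER = "answer"
    CLARIFY = "clarify"
    ESCALATE = "escalate"


def decide(confidence: float, grounded: bool, clarifications_asked: int) -> NextAction:
    """Answer only when grounded and confident; otherwise clarify, then escalate."""
    if grounded and confidence >= ANSWER_THRESHOLD:
        return NextAction.ANSWER
    if confidence >= CLARIFY_THRESHOLD and clarifications_asked < MAX_CLARIFICATIONS:
        return NextAction.CLARIFY
    return NextAction.ESCALATE
```

Ungrounded replies never go out as answers, however confident the score, and a conversation that keeps circling after two clarifying questions goes to a human.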

Key Questions to Ask Chatbot and AI Agent Vendors

Don't accept positioning language. Ask vendors to show evidence:

Bottom Line: When a Chatbot Is Enough and When You Need an AI Agent

The question isn't "chatbot or agent?" It's "what job does your website need to do when no rep is in the room?"

If that job is routing—pointing people to the right resource or team—a chatbot works fine.

If that job is evaluation—helping buyers figure out whether you're the right fit for their specific situation—you need an agent.

Buyers aren't waiting for sales to answer their hard questions. They're evaluating on your website right now, often outside business hours, often comparing you to competitors in real time. Your website either handles that moment or loses it.

Meet your buyers in their buying journey. Book a demo!